Publications

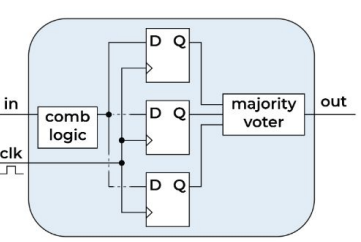

HIDA: A Hierarchical Dataflow Compiler for High-Level Synthesis

hls4ml: low latency neural network inference on FPGAs

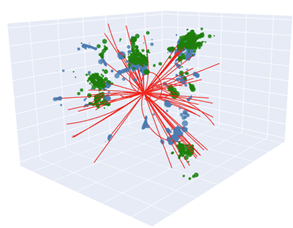

Graph Neural Network-based Track finding as a Service with ACTS

NSF HDR ML Anomaly Detection Challenge

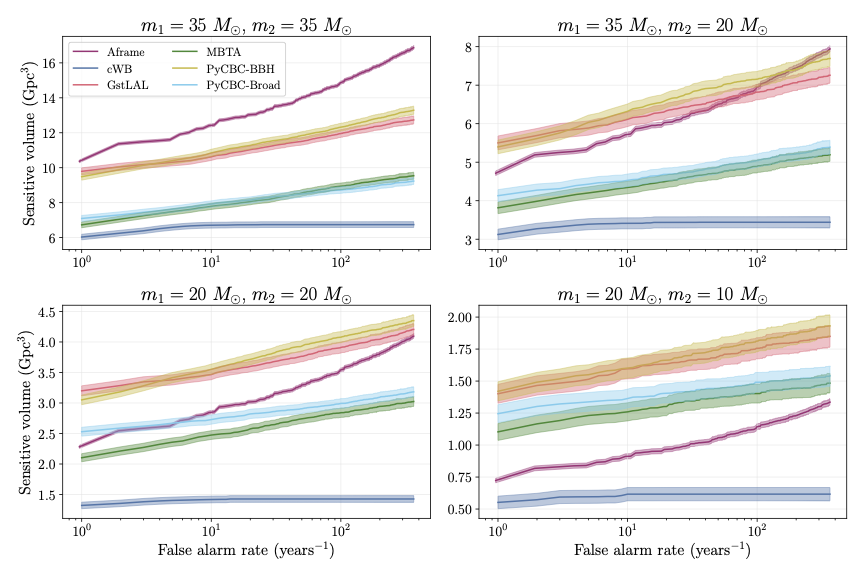

A search for binary mergers in archival LIGO data using aframe, a machine learning detection pipeline

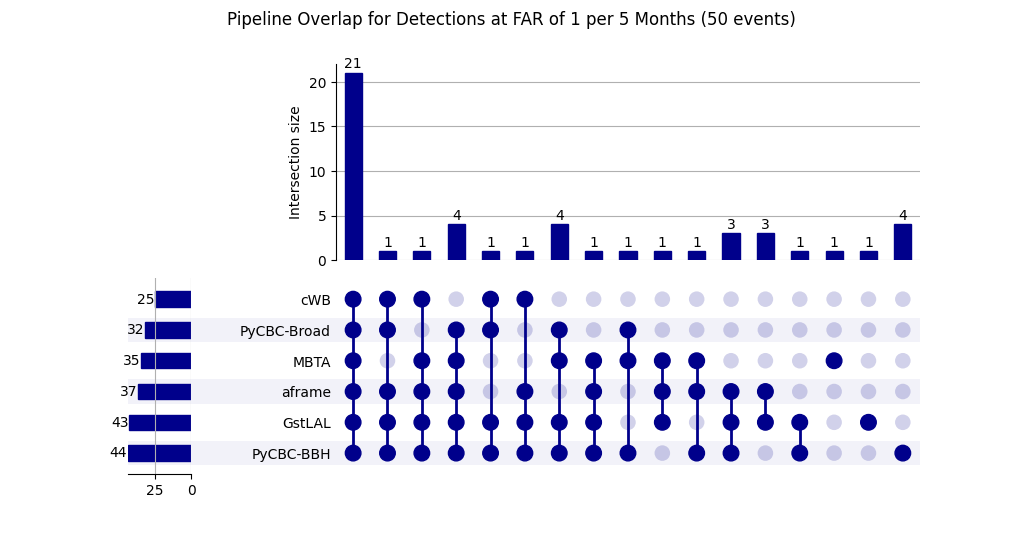

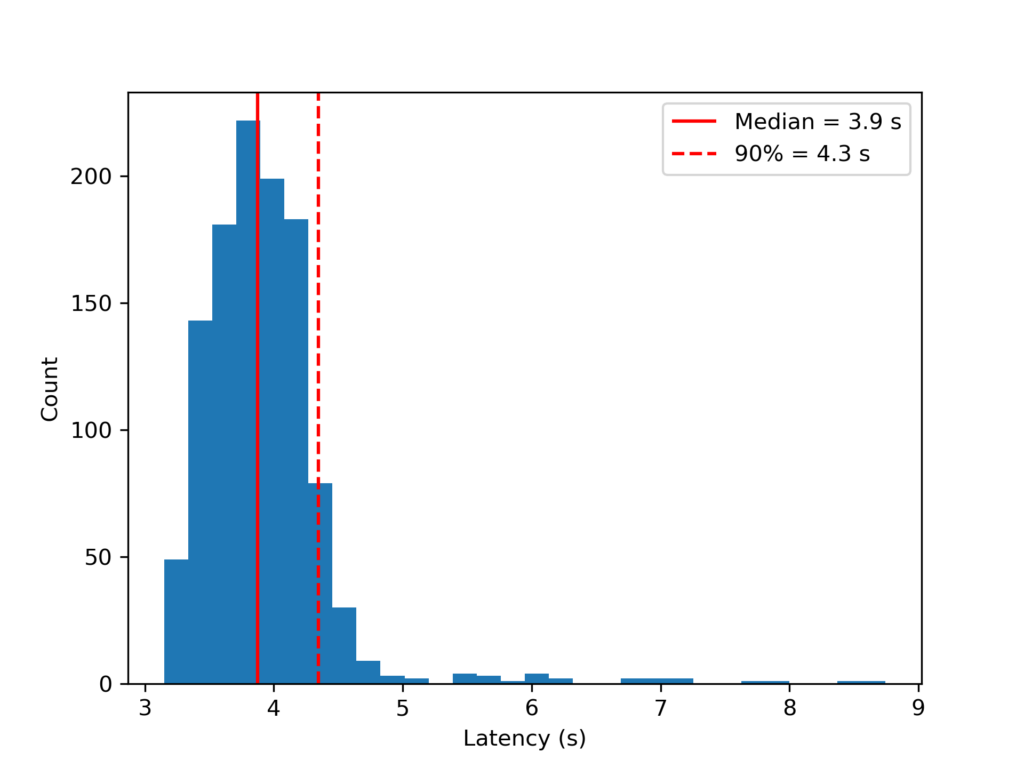

A machine-learning pipeline for real-time detection of gravitational waves from compact binary coalescences

Energy Frontier Exploration using Particle Physics and AI

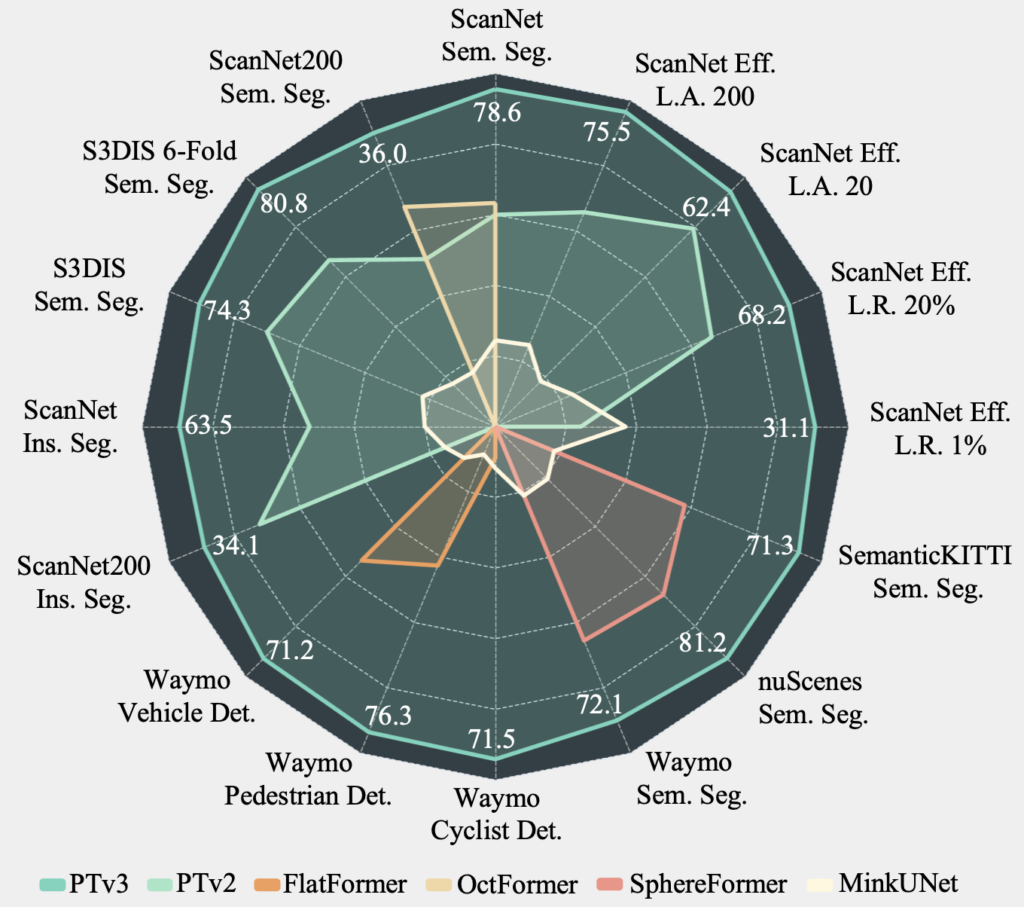

Point Transformer V3: Simpler, Faster, Stronger

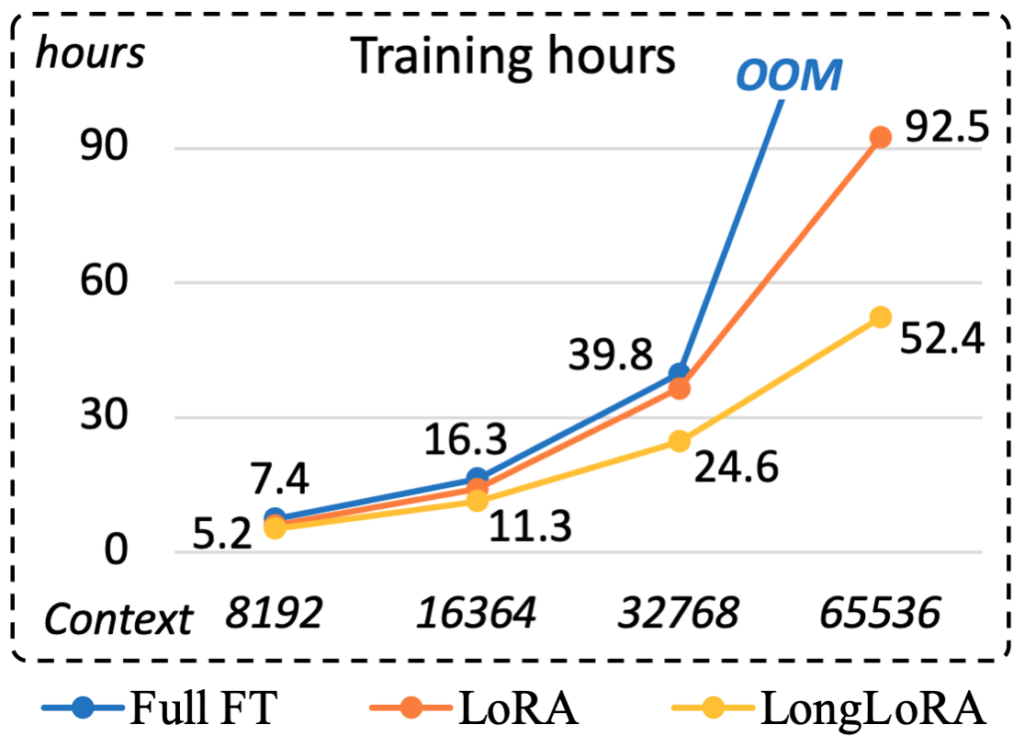

LongLoRA: Efficient Fine-tuning of Long-Context Large Language Models

MIT

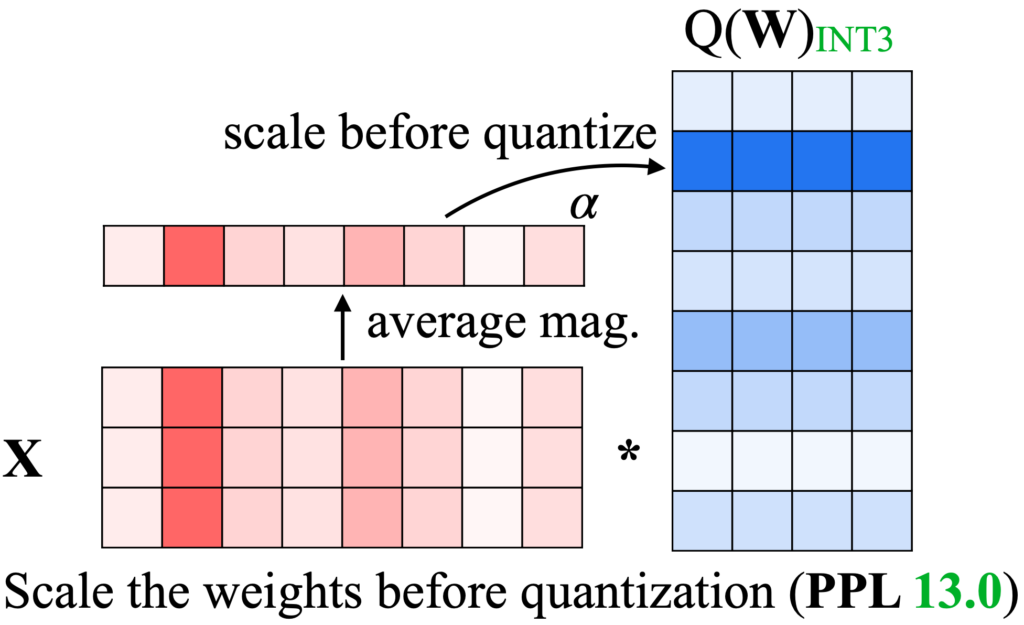

AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration

Uncertainty Quantification and Anomaly Detection with Evidential Deep Learning

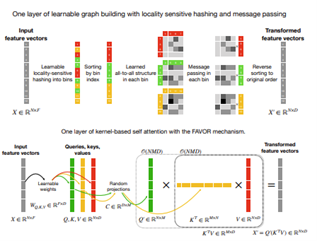

Ultra Fast Transformers on FPGAs for Particle Physics Experiments

University of Washington | MIT

The Stability of Positional Encodings for Graphs

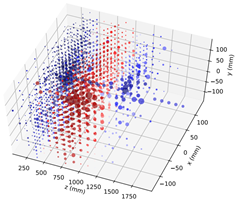

Supernova Neutrino Burst Detection with Mineral Detectors

On the Stability of Expressive Positional Encodings for Graphs

Neuroscience ML challenges using CodaBench: Decoding multi-limb trajectories from two-photon calcium imaging

High Pileup Particle Tracking with Object Condensation

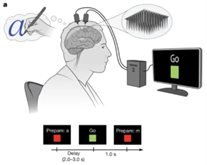

FPGA Deployment of LFADS for Real-time Neuroscience Experiments

University of Washington

Explainable AI for Interpretability of Neural Networks

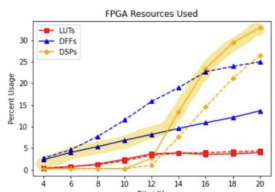

Evaluating the Quality of HLS4ML’s Basic Neural Network Implementations on FPGAs

Deep Learning Applications for Particle Physics in Tracking and Calorimetry

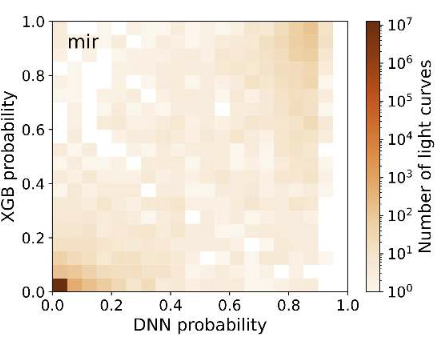

The ZTF Source Classification Project: III. A Catalog of Variable Sources

Quantifying the Efficiency of High-Level Synthesis for Machine Learning Inference

University of Washington

Low Latency Edge Classification GNN for Particle Trajectory Tracking on FPGAs

University of Washington | University of California San Diego

Machine Learning Acceleration — Quantization Process and Tools Development

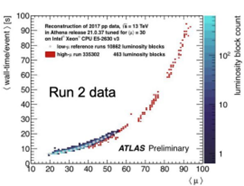

Machine Learning acceleration in the Global Event Processor of the ATLAS Trigger Upgrade

By Zhixing Jiang | University of Washington

Accelerating CNNs on FPGAs for Particle Energy Reconstruction

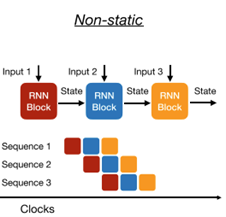

Ultra-low latency recurrent neural network inference on FPGAs for physics applications with hls4ml

University of Washington | MIT

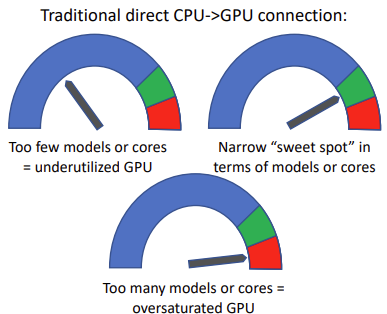

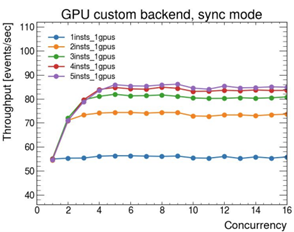

Portable Acceleration of CMS Mini-AOD Production with Coprocessors as a Service

By William Patrick McCormack | MIT

Jets as sets or graphs: Fast jet classification on FPGAs for efficient triggering at the HL-LHC

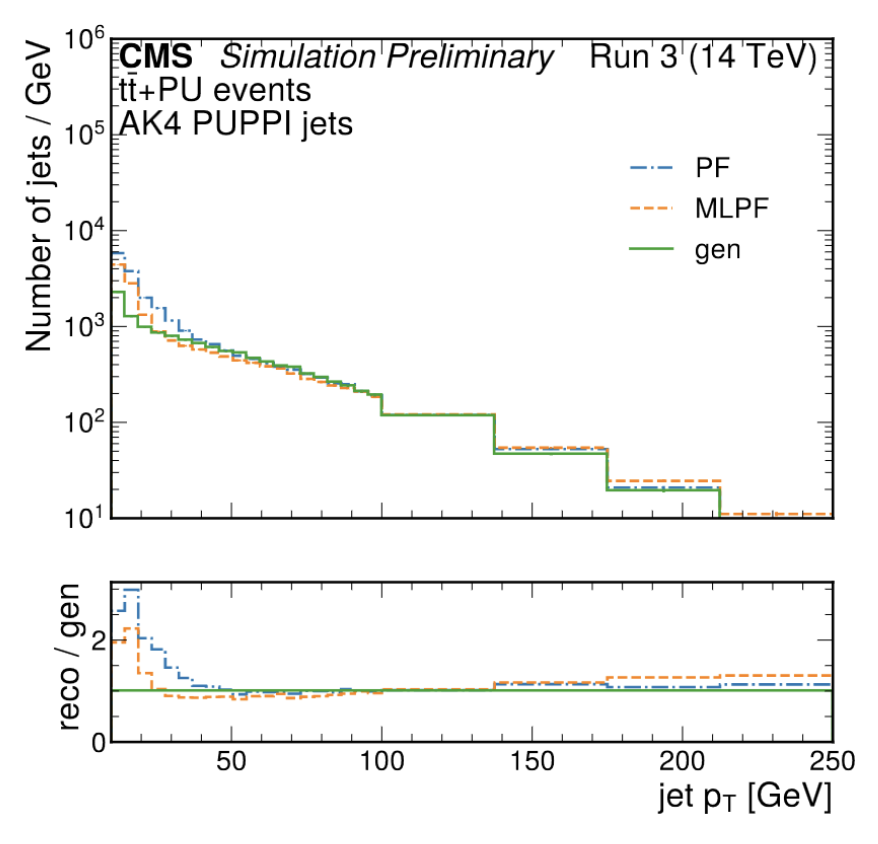

MLPF: Machine Learning for Particle Flow

Scalable neural network models and terascale datasets for particle-flow reconstruction

By Joosep Pata

Improved particle-flow event reconstruction with scalable neural networks for current and future particle detectors

ACTS as a Service

FKeras: A Sensitivity Analysis Tool for Edge Neural Networks

University of California San Diego

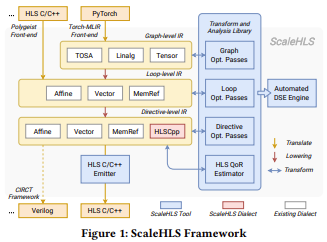

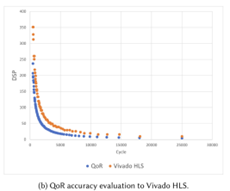

ScaleHLS, a Scalable High-level Synthesis Framework with Multi-level Transformations and Optimizations

AutoScaleDSE: A Scalable Design Space Exploration Engine for High-Level Synthesis

Track reconstruction for the ATLAS Phase-II High-Level Trigger using Graph Neural Networks on FPGAs

By Santosh Parajuli | University of Illinois at Urbana-Champaign

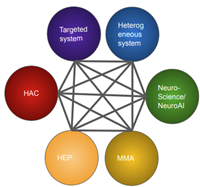

Fast ML in the NSF HDR Institute: A3D3

Community Vision, Needs, and Progress

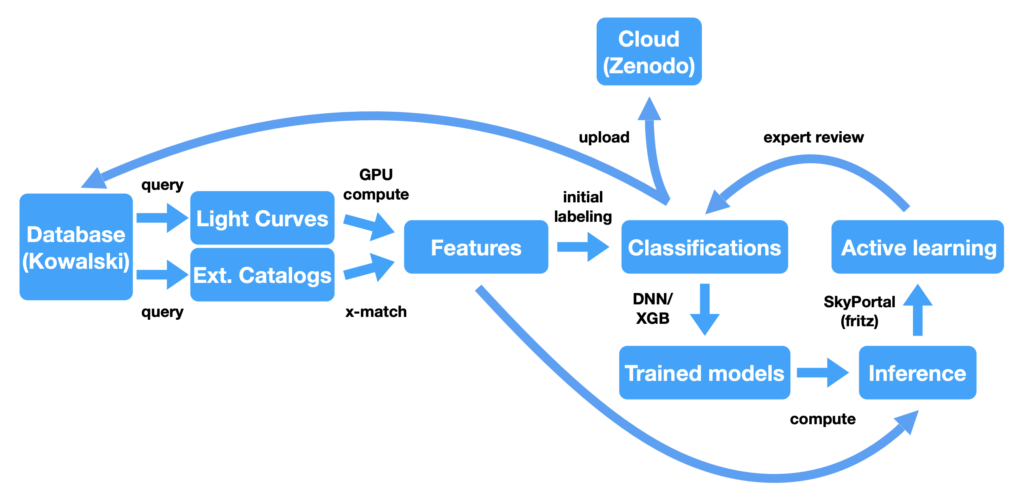

ZTF SCoPe: A Catalog of Variable Sources

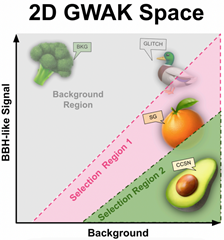

GWAK: Gravitational-Wave Anomalous Knowledge with Recurrent Autoencoders

University of Minnesota | MIT

Parameter estimation using Likelihood-free Inference

By Malina Desai | University of Minnesota | MIT

BEVFusion-R: Efficient and Deployment-Ready Camera-Radar Fusion

MIT

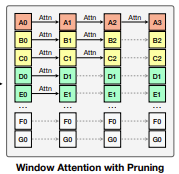

SparseViT: Revisiting Activation Sparsity for Efficient High-Resolution Vision Transformer

MIT

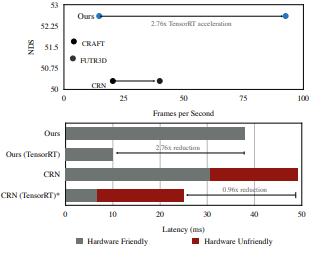

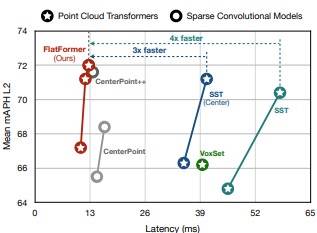

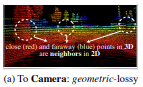

FlatFormer: Flattened Window Attention for Efficient Point Cloud Transformer

MIT

BEVFusion: Multi-Task Multi-Sensor Fusion with Unified Bird’s-Eye View Representation

MIT

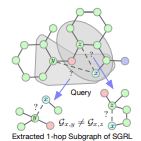

SUREL+: Moving from Walks to Sets for Scalable Subgraph-based Graph Representation Learning

Purdue University | Georgia Institute of Technology

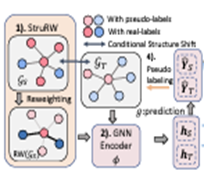

Structural Re-weighting Improves Graph Domain Adaptation

Progress towards an improved particle flow algorithm at CMS with machine learning, 2023, ACAT

Semi-supervised Graph Neural Networks for Pileup Noise Removal, 2022, NeurIPS AI4Science, EPJC

Interpretable Geometric Deep Learning via Learnable Randomness Injection, 2023, ICLR

Neighborhood-aware Scalable Temporal Network Representation Learning, 2022, LoG

Algorithm and System Co-design for Efficient Subgraph-based Graph Representation Learning, 2022, VLDB

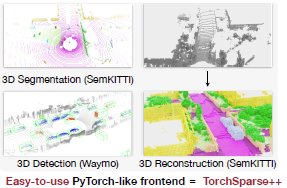

TorchSparse++: Efficient Point Cloud Engine

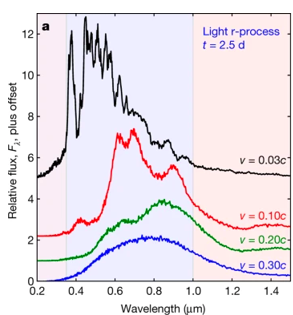

NMMA: A nuclear-physics and multi-messenger astrophysics framework to analyze binary neutron star mergers, 2023, submitted

Tutorials on ScaleHLS, FPGA, Feb. 27, 2022

Interpretable and Generalizable Graph Learning via Stochastic Attention Mechanism, 2022, ICML

Javier Duarte, Artificial Intelligence at the Edge of Particle Physics, HEP Seminar, Columbia University, November 17, 2021

Applications and Techniques for Fast Machine Learning in Science, 2021, Submitted to Front. Big Data

PointAcc: Efficient Point Cloud Accelerator, 2021, MICRO

Shih-Chieh Hsu, NSF HDR Institute: Accelerated Artificial Intelligence Algorithms for Data-Driven Discovery (A3D3), Fast ML General Meeting, October 1, 2021

ScaleHLS: A New Scalable High-Level Synthesis Framework on Multi-Level Intermediate Representation, 2022, HPCA

Searching Efficient 3D Architectures with Sparse Point-Voxel Convolution, 2020, ECCV