Heterogeneous Systems

Facilitating ML-focused science pipelines within the national distributed cyberinfrastructure ecosystem

ML-based science applications perform best on a mix of computational resources (CPUs, GPUs, FPGAs, etc.), i.e., heterogeneous compute. For many researchers, these resources are difficult to access, unavailable, or not cost-effective to invest in. Heterogeneous computing resources are available within the national cyberinfrastructure (CI) ecosystem. At the same time, they are distributed across various facilities with

Projects

Services for Optimized Network Inference on Coprocessors (SONIC)

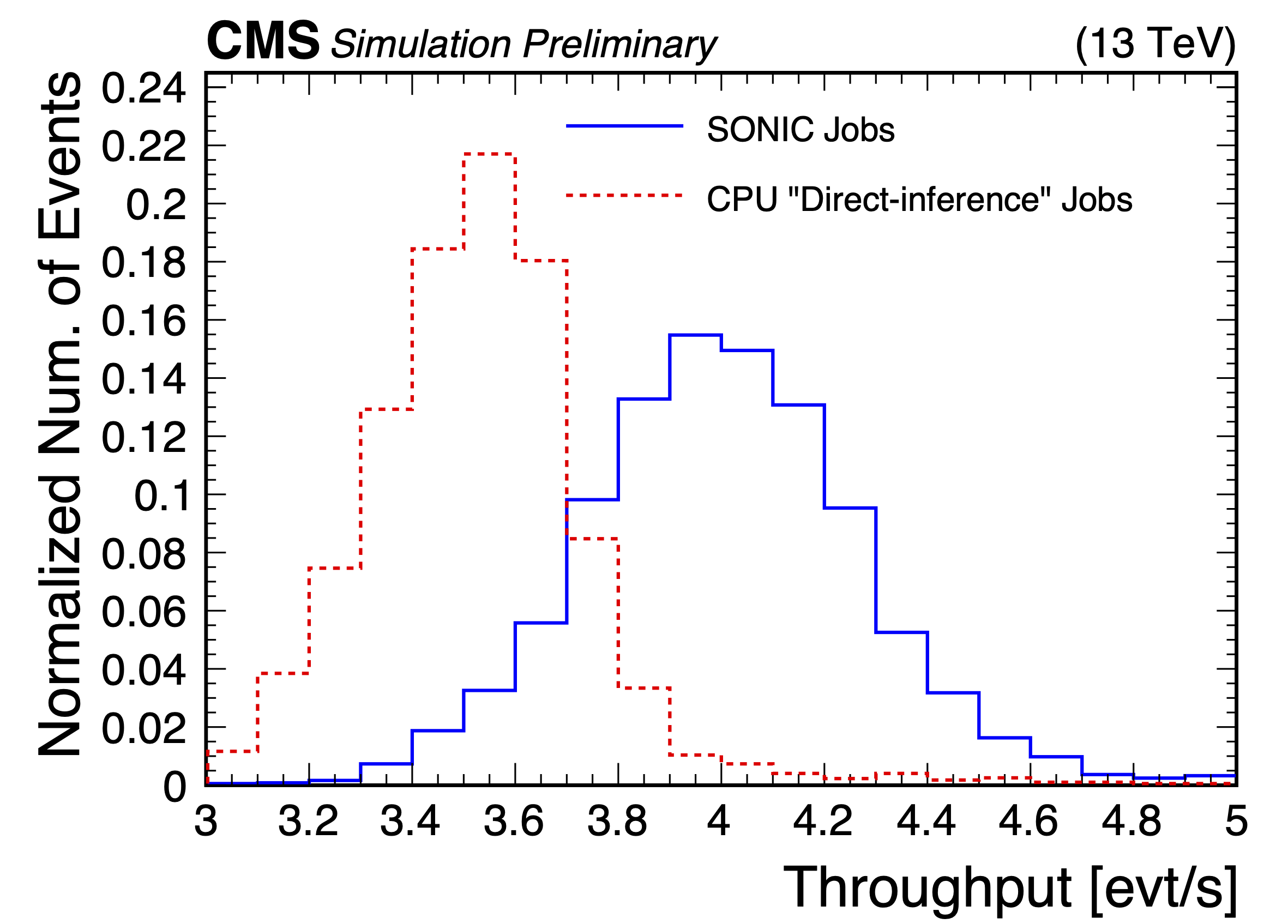

SONIC was the first attempt to investigate the as-a-service model for the machine learning-based portions of data analysis pipelines in large-scale scientific projects, in particular offloading the ML-based pipeline steps to local or remote coprocessors. Offloading these steps to coprocessors has been shown to improve overall throughput compared to running purely on CPU resources.
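The offloading pattern described above can be illustrated with a minimal sketch. This is not SONIC's implementation: `ToyInferenceService`, `run_pipeline`, and the placeholder "model" are hypothetical names invented for illustration, and a thread pool stands in for a local or remote coprocessor. The point it tries to show is only the shape of the workflow: the pipeline does its CPU-side preprocessing, submits batches to a separate inference service without blocking, and collects the results afterwards.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import List


class ToyInferenceService:
    """Hypothetical stand-in for a coprocessor-backed inference server."""

    def __init__(self, workers: int = 2) -> None:
        # Assumption: worker threads play the role of coprocessors.
        self._pool = ThreadPoolExecutor(max_workers=workers)

    @staticmethod
    def _model(batch: List[List[float]]) -> List[float]:
        # Placeholder "model": score each event by summing its features.
        return [float(sum(event)) for event in batch]

    def submit(self, batch: List[List[float]]) -> Future:
        # Non-blocking request: the caller gets a Future and keeps working.
        return self._pool.submit(self._model, batch)

    def close(self) -> None:
        self._pool.shutdown(wait=True)


def run_pipeline(events: List[List[float]],
                 service: ToyInferenceService,
                 batch_size: int = 2) -> List[float]:
    # Step 1: CPU-side preprocessing stays in the pipeline itself.
    preprocessed = [[2.0 * x for x in event] for event in events]

    # Step 2: offload the ML step in batches to the inference service.
    futures = [
        service.submit(preprocessed[i:i + batch_size])
        for i in range(0, len(preprocessed), batch_size)
    ]

    # Step 3: gather results in submission order.
    scores: List[float] = []
    for future in futures:
        scores.extend(future.result())
    return scores


service = ToyInferenceService()
scores = run_pipeline([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], service)
service.close()
print(scores)
```

In a real deployment, the service would sit behind a network endpoint and run on GPUs or FPGAs. The sketch keeps everything in one process so that it runs without external dependencies.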

PAPER

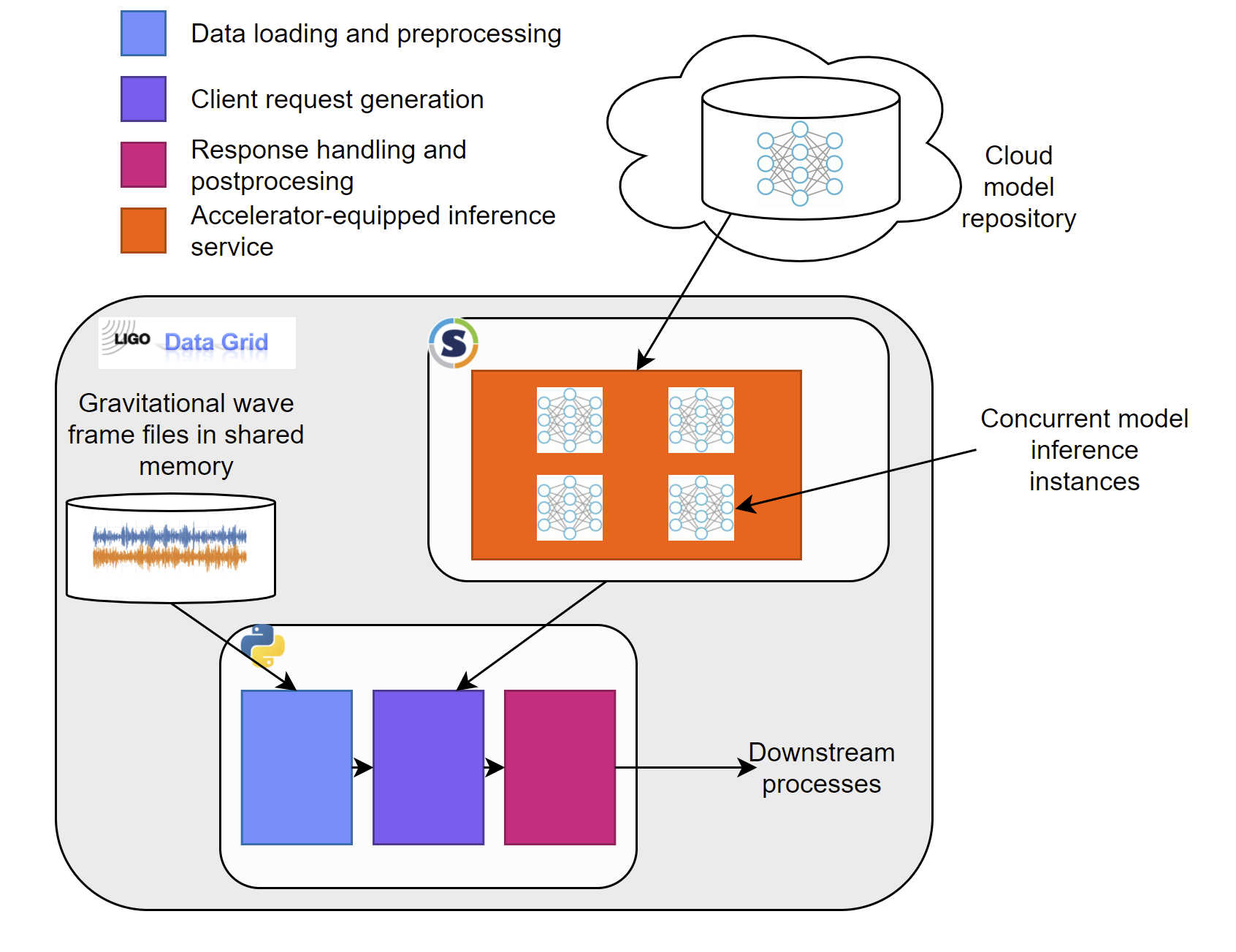

Gravitational-wave inference-as-a-service prototype

The goal of this project is to establish a versatile platform for deploying real-time machine learning algorithms in gravitational-wave science. Motivated by the inference-as-a-service paradigm, we aim to use existing computing resources efficiently while ensuring real-time capabilities. We have demonstrated sub-second latencies by implementing distributed inference in both noise cleaning and compact binary coalescence detection pipelines, specifically tailored for gravitational-wave computing resources. This addresses the need for rapid analysis in gravitational-wave science and shows that deploying machine learning algorithms at this speed is feasible.

For more information, please see here.